AI-Integrated Creative Practice

This page brings together a selection of my projects in which AI is a core part of the visual workflow (3D assets, animation, and image generation) and is used throughout the full creative pipeline.

AI is applied at multiple stages of my practice: from early-stage proposal prototyping, through production-level visual construction, to final compositing and refinement, where generative outputs are adapted to specific spatial, architectural, and contextual constraints.

Rather than using AI purely for aesthetic generation, my workflow focuses on transforming generative visuals into structurally feasible, site-responsive, and production-ready visual systems—supporting video content, architectural projections, immersive installations, and spatial narratives.

For a complete overview of my video works: https://www.heqiuyao.com/video

For a complete overview of my immersive works: https://www.heqiuyao.com/

AI Animation for Architectural Projection Mapping @ Genius Loci Weimar

A Fully AI-Driven Visual Pipeline | My Role: Creative Lead, Narrative & Visual Design, AI Concept Development, Image & Video Generation, Compositing, Editing, Sound Design

Tools: Midjourney, Dreamina, GPT, Kling, Runway, Veo3

All additional on-site video and photographic documentation, including filming and editing, was produced by me and is available here:

https://www.heqiuyao.com/work-4/architecture-projection-mapping-aigenerated

Context & Concept

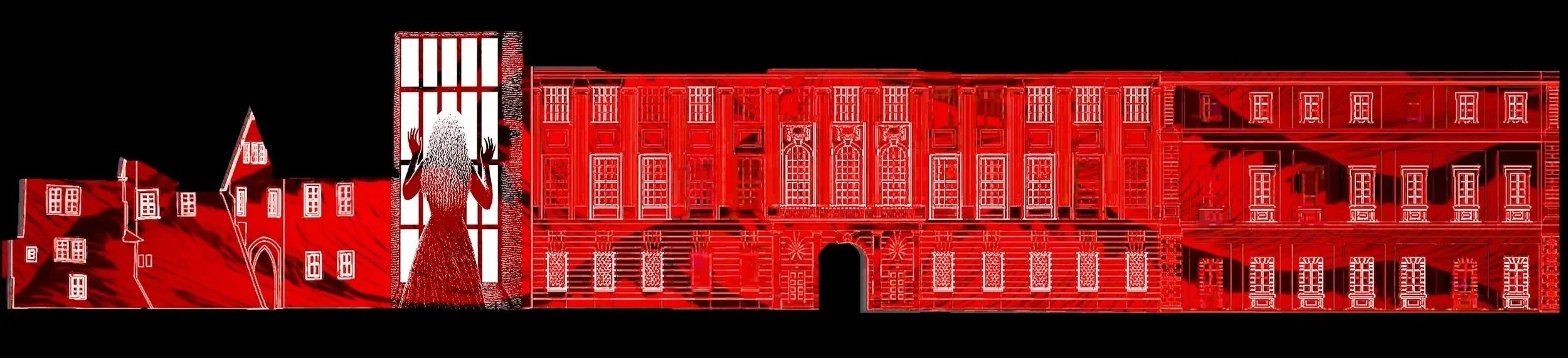

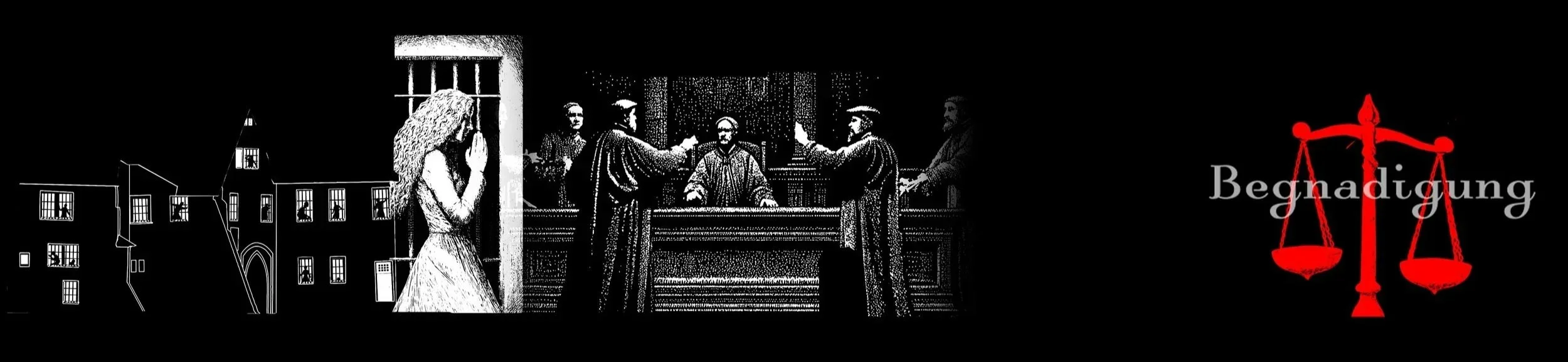

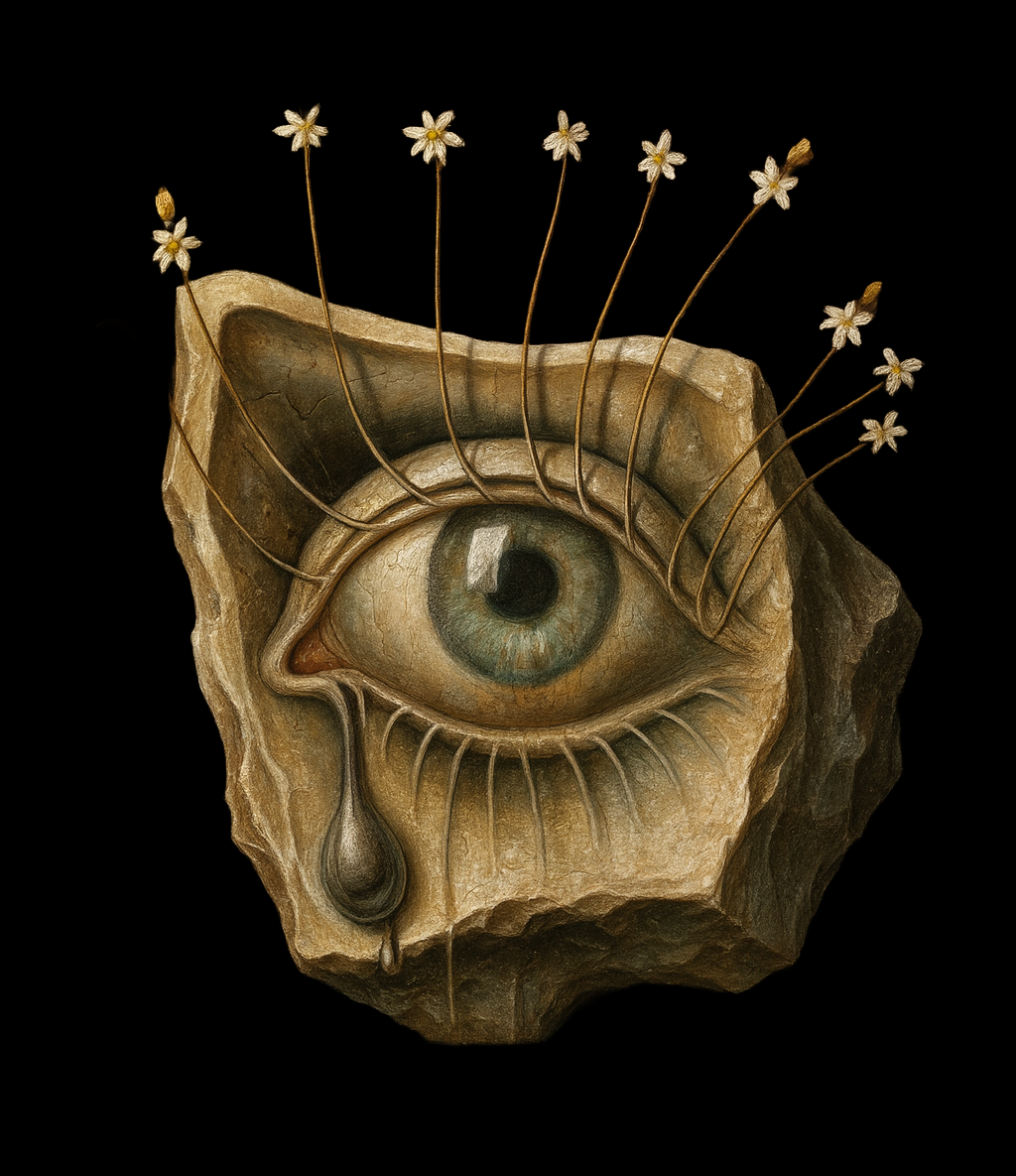

Created for the 250th anniversary of Goethe’s arrival in Weimar, this seven-minute architectural projection on Weimar City Castle re-stages Faust as a public intervention.

The work juxtaposes Goethe’s literary compassion toward Gretchen with his historical role in approving the execution of a woman condemned for the same crime as Gretchen. After exposing this dissonance, the piece culminates in a QR-code interface that invites the public to decide Gretchen’s fate themselves.

Generative Tools & Motion Synthesis

Still imagery and visual concepts were developed using Midjourney, Dreamina, and GPT-based systems.

Video sequences were primarily generated with Kling, with limited use of additional generative platforms. Kling was selected for its ability to maintain stylistic continuity, facial coherence, and motion consistency across longer sequences.

Multi-Layer Composition for Architectural Canvases

Because the façade functions as an extended, non-standard canvas, the projection could not be generated as a single unified image or video.

Each scene was constructed through a layered generative process: multiple AI-generated components were assembled, stitched, and spatially re-aligned to fit the architectural surface. Transitional seams and overlaps were resolved through compositing and refinement.

During storyboarding, AI was already used to generate modular visual segments. These components were assembled into façade-scale mockups—both sectional studies and panoramic strips—allowing the full architectural composition to be pre-visualized and iterated before final synthesis.
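The assembly of modular segments into a panoramic strip can be illustrated with a minimal sketch. It is not the production tooling used for this project: the segment names, pixel widths, and the fixed overlap are hypothetical, and the function only computes where each segment would sit so that neighbouring segments share a blend zone for seam compositing.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    name: str   # hypothetical segment label
    x: int      # left edge of the segment on the strip, in pixels
    width: int  # segment width in pixels


def layout_strip(segments, overlap):
    """Place segments left to right so each overlaps the previous one by `overlap` pixels.

    Returns the list of placements and the total strip width.
    """
    placements = []
    x = 0
    for name, width in segments:
        if width <= overlap:
            raise ValueError(f"segment {name!r} is narrower than the overlap")
        placements.append(Placement(name, x, width))
        x += width - overlap  # the next segment starts inside the blend zone
    total_width = x + overlap if placements else 0
    return placements, total_width


# Hypothetical façade sections; the real widths depend on the projection setup.
segments = [("left_wing", 1920), ("tower", 1080), ("right_wing", 1920)]
placements, total_width = layout_strip(segments, overlap=120)
for p in placements:
    print(p.name, p.x, p.x + p.width)
print("strip width:", total_width)
```

A fixed overlap keeps the sketch simple; in practice the blend zone would likely vary with the architectural features at each seam.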

AI Photorealism Test

A Fully AI-Driven Visual Pipeline | Spatial continuity test across multiple camera angles.

The video is inspired by a dream: I wanted to enter a swimming pool, but I was not allowed — for a strange reason.

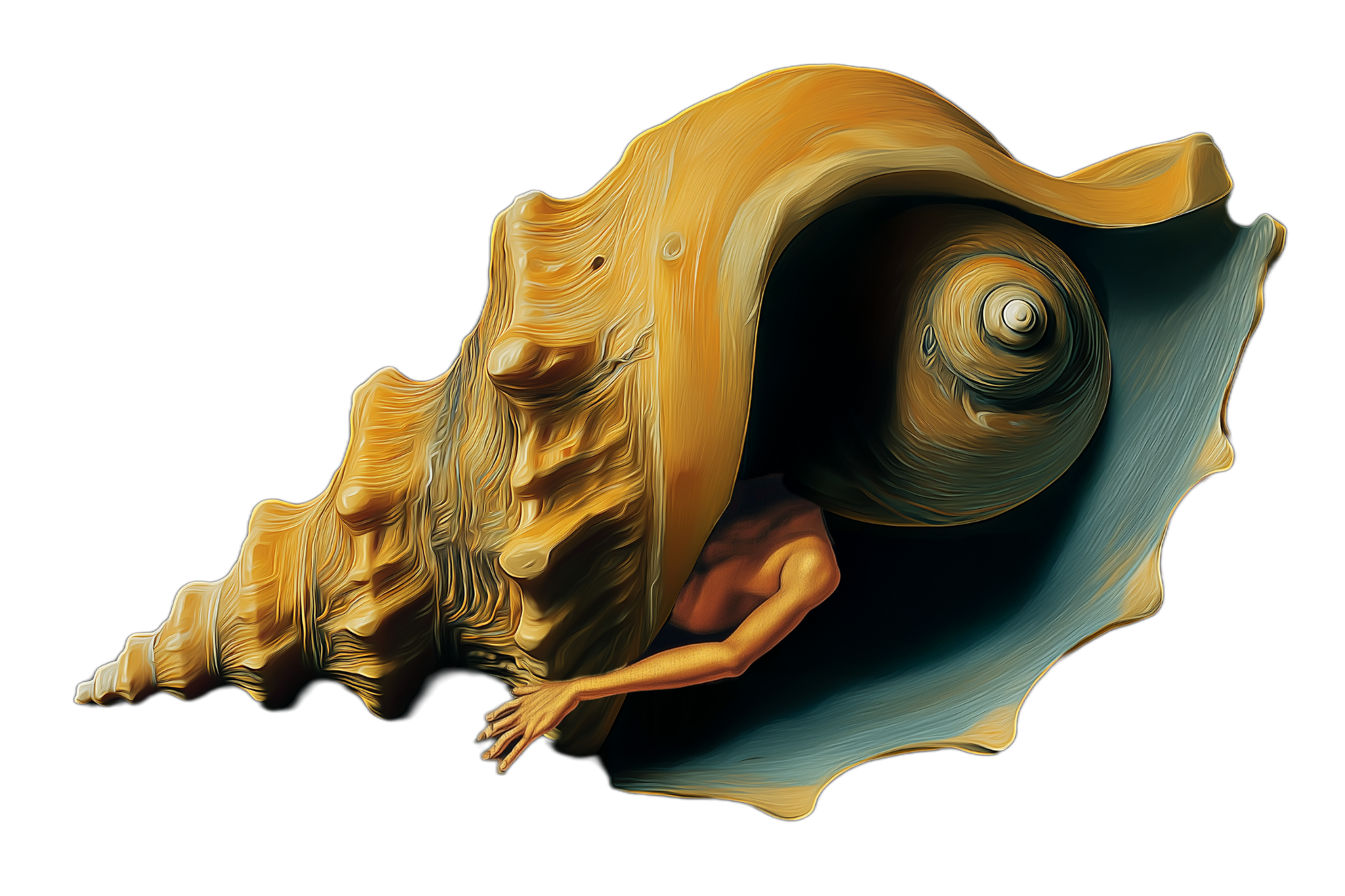

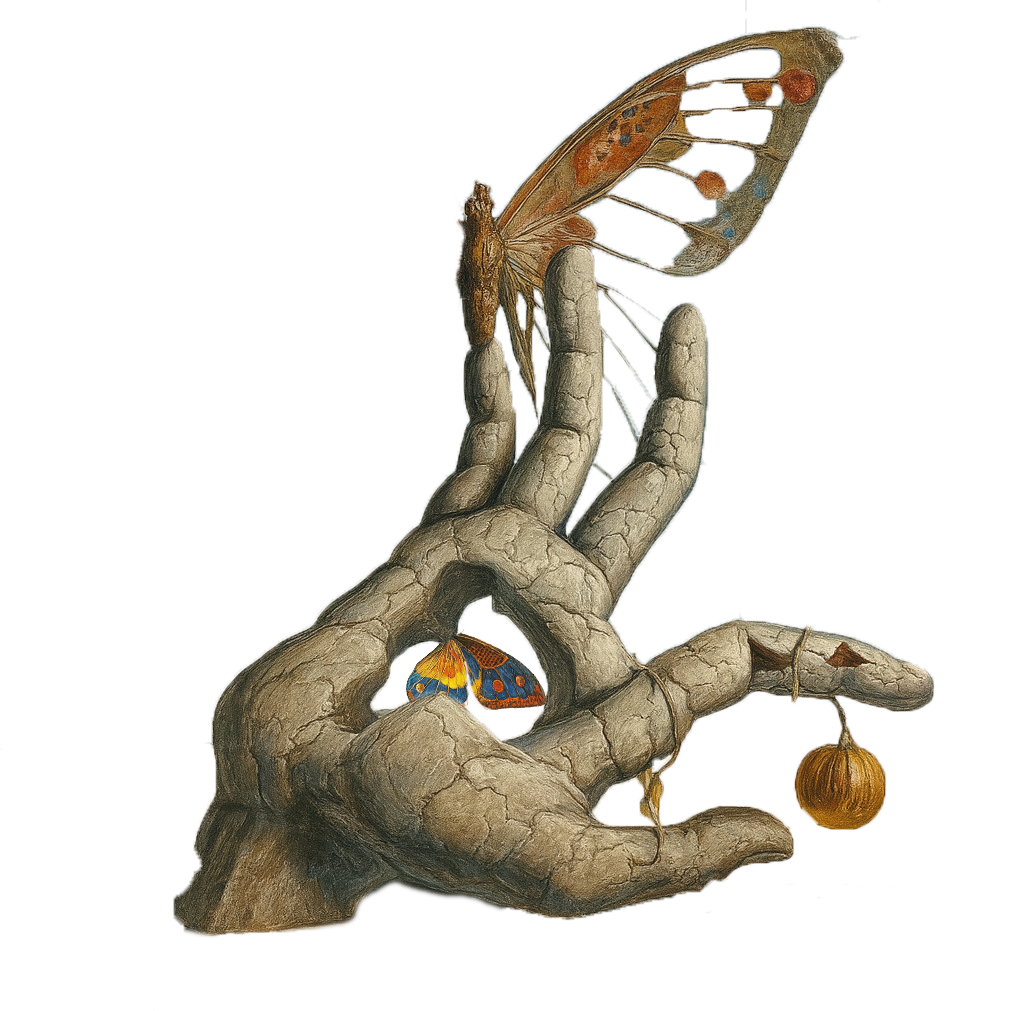

Real-Time AI 3D Assets for Immersive Experience @ Dali Museum

AI-Assisted 3D Creation Pipeline | My Role: Creative Lead, Visual Design, AI Image/3D Generation, Tech Art, Developer

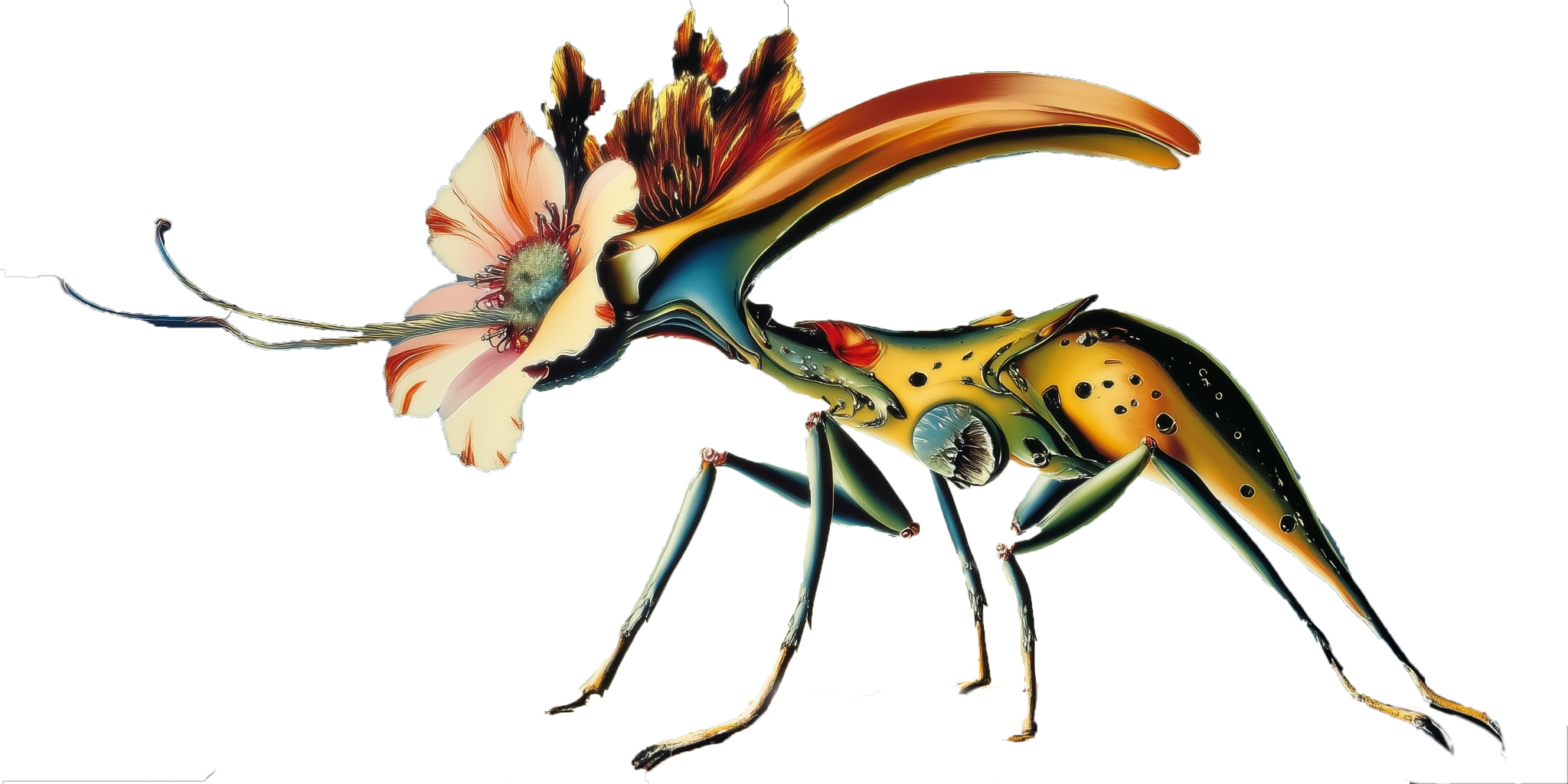

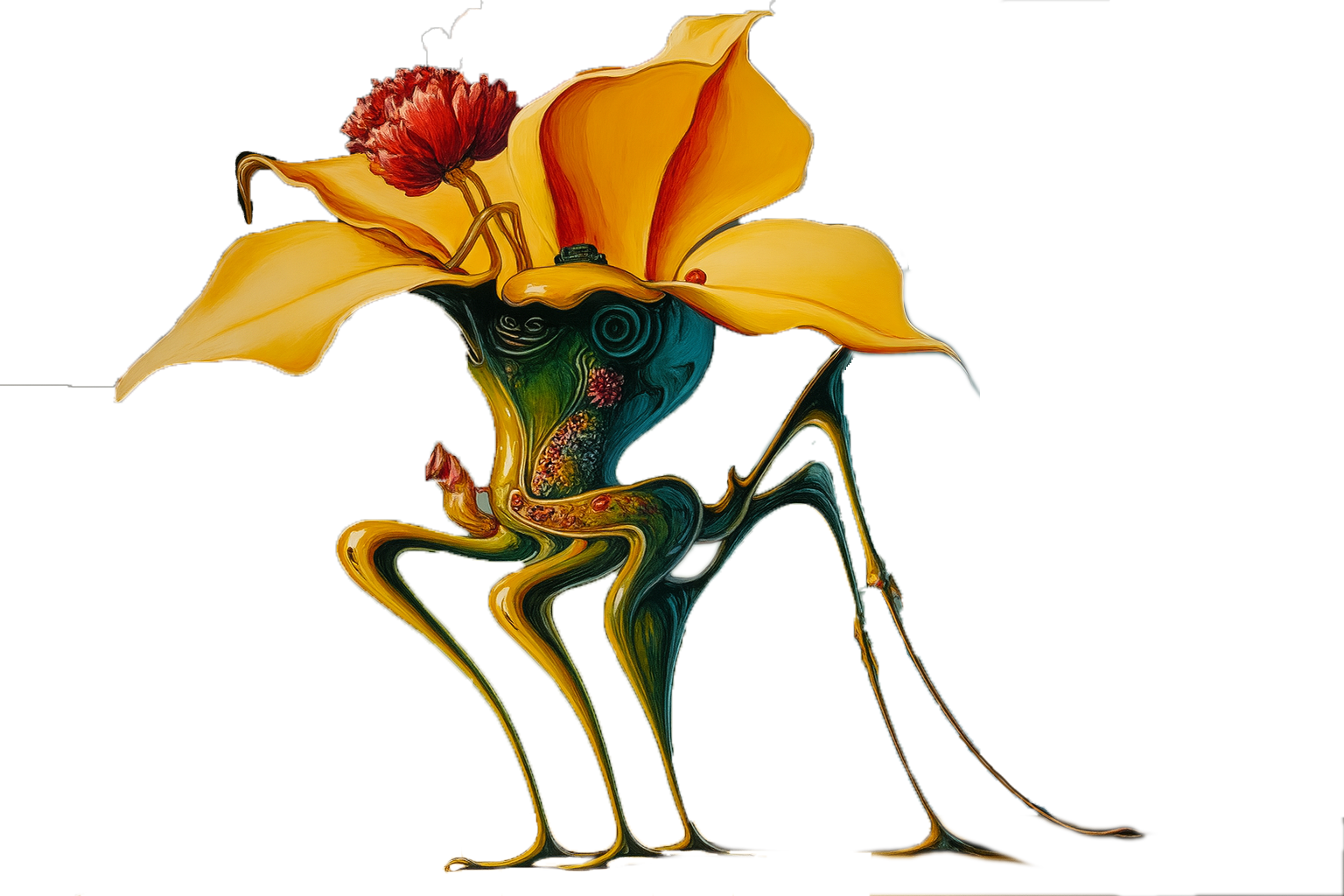

This project uses an AI-assisted pipeline to generate a set of animated 3D assets for a real-time immersive projection environment.

The environment is composed of approximately 30 animated 3D objects.

Most objects are produced through AI-assisted generation, with varying degrees of AI involvement depending on each object’s structural and animation complexity.

Workflow

1. Concept → Modular Object Design

Concept development is carried out through an AI + manual refinement workflow, where animation behavior is considered from the very beginning.

Objects are conceived not as single meshes, but as modular, part-based structures in preparation for later animation.

2. Part-Based 3D Generation

Each object is generated as a set of separate 3D components.

Parts that need to move and parts that remain static are generated independently to support later rigging and animation.

3. AI Texture & Material Synthesis

Some textures are fully AI-generated, while others are manually refined on top of AI-generated bases.

All textures are converted into engine-ready PBR material sets.

4. Rigging & Animation Preparation

Each component is cleaned, optimized, and prepared for rigging, enabling objects to deform, shift, and respond to interaction and projection light in real time.

5. Engine Integration

All animated objects are assembled inside the game engine, where lighting, projection, and interaction systems are applied.
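The part-based structure described in the steps above can be sketched as a small asset manifest. This is an illustrative data model only, not the project's actual tooling: the class names, the example object, the file paths, and the chosen set of PBR maps (base color, normal, roughness, metallic) are all assumptions. It shows how moving and static parts might be tracked separately and how incomplete material sets could be flagged before engine integration.

```python
from dataclasses import dataclass, field

# Assumed map set for an engine-ready PBR material; a given engine may need more or fewer.
PBR_MAPS = ("base_color", "normal", "roughness", "metallic")


@dataclass
class Part:
    name: str
    moving: bool                                  # True if the part will be rigged and animated
    textures: dict = field(default_factory=dict)  # map name -> file path

    def missing_maps(self):
        """Return the PBR maps this part still lacks."""
        return [m for m in PBR_MAPS if m not in self.textures]


@dataclass
class ModularObject:
    name: str
    parts: list

    def rig_targets(self):
        """Names of parts that need rigging (the moving ones)."""
        return [p.name for p in self.parts if p.moving]

    def static_parts(self):
        """Names of parts that stay fixed."""
        return [p.name for p in self.parts if not p.moving]

    def incomplete_materials(self):
        """Map each part with missing PBR maps to the list of maps it lacks."""
        return {p.name: p.missing_maps() for p in self.parts if p.missing_maps()}


# Hypothetical object with one animated part and one static part.
clock = ModularObject(
    name="melting_clock",
    parts=[
        Part("face", moving=True, textures={m: f"clock/face_{m}.png" for m in PBR_MAPS}),
        Part("base", moving=False, textures={"base_color": "clock/base_base_color.png"}),
    ],
)
print(clock.rig_targets())
print(clock.static_parts())
print(clock.incomplete_materials())
```

Keeping the moving/static flag on each part mirrors the idea of generating those parts independently, so the rigging step only has to touch the components that actually animate.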

AI-Assisted Proposal Workflow

AI-Assisted Visual Pipeline | My Role: Creative Lead, Experience Design, Visual Design, AI Image/Video Generation

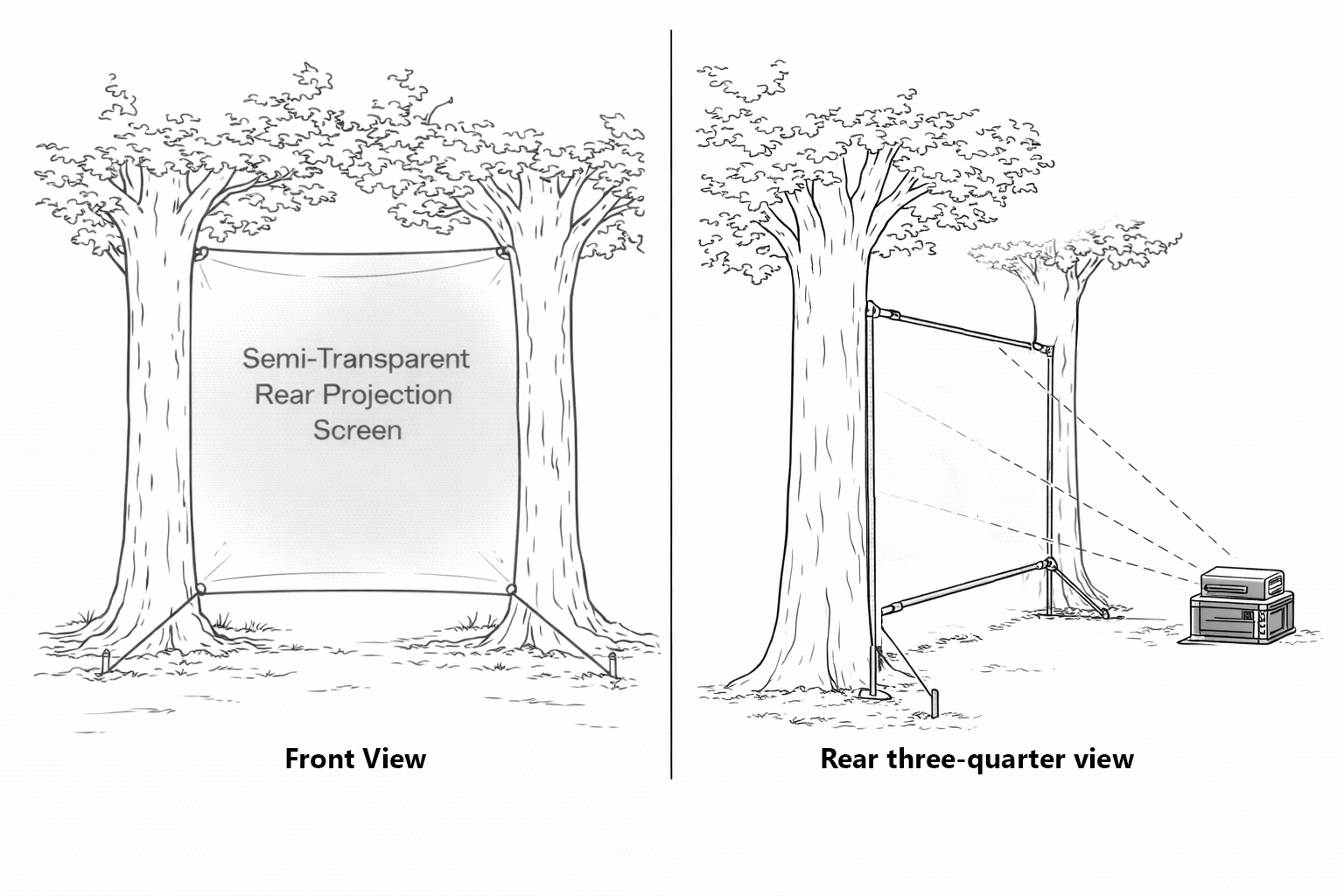

Scope

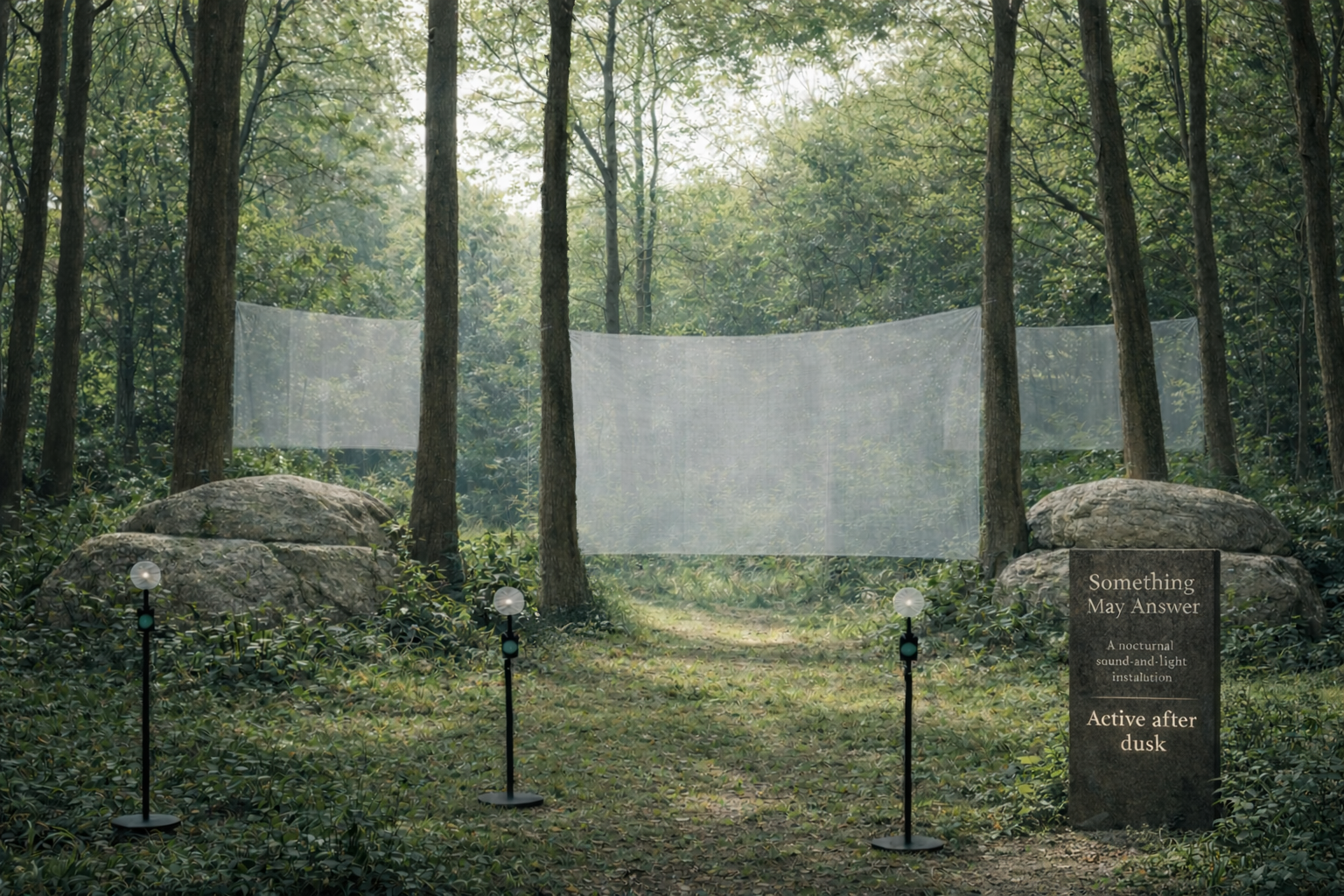

This workflow supports a series of successful proposals spanning architectural projections and public art installations.

All visual prototypes—including images, videos, and motion studies—were developed through AI-assisted generation and refinement.

Hybrid Generative Approach

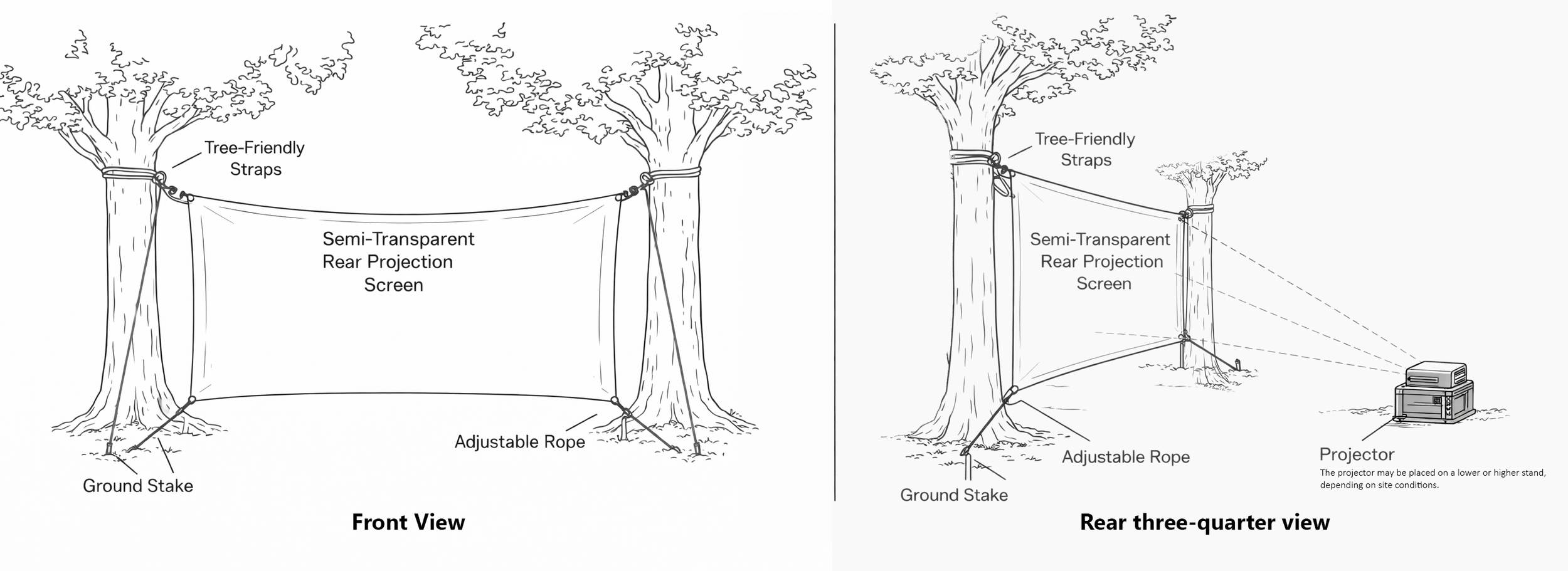

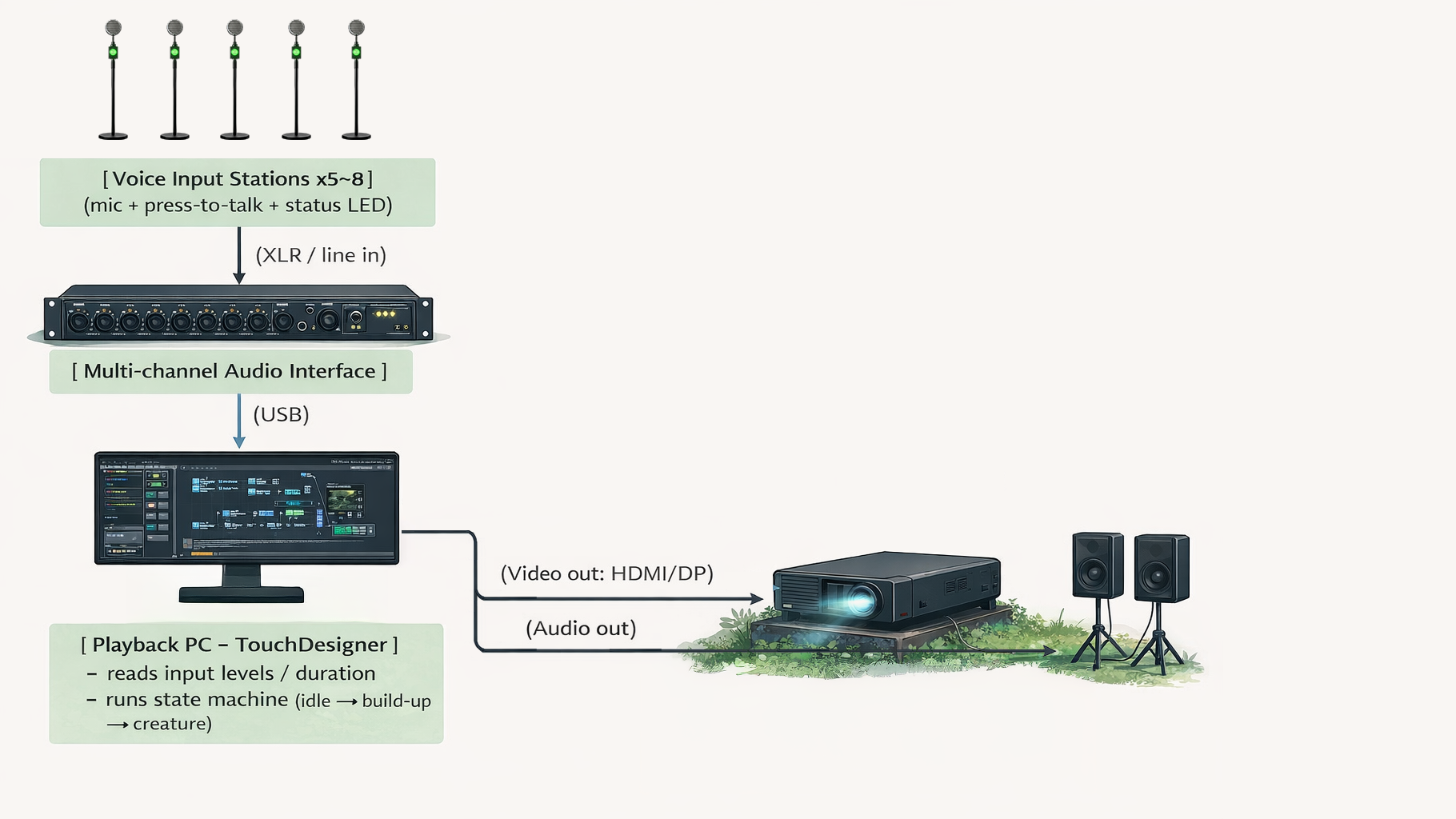

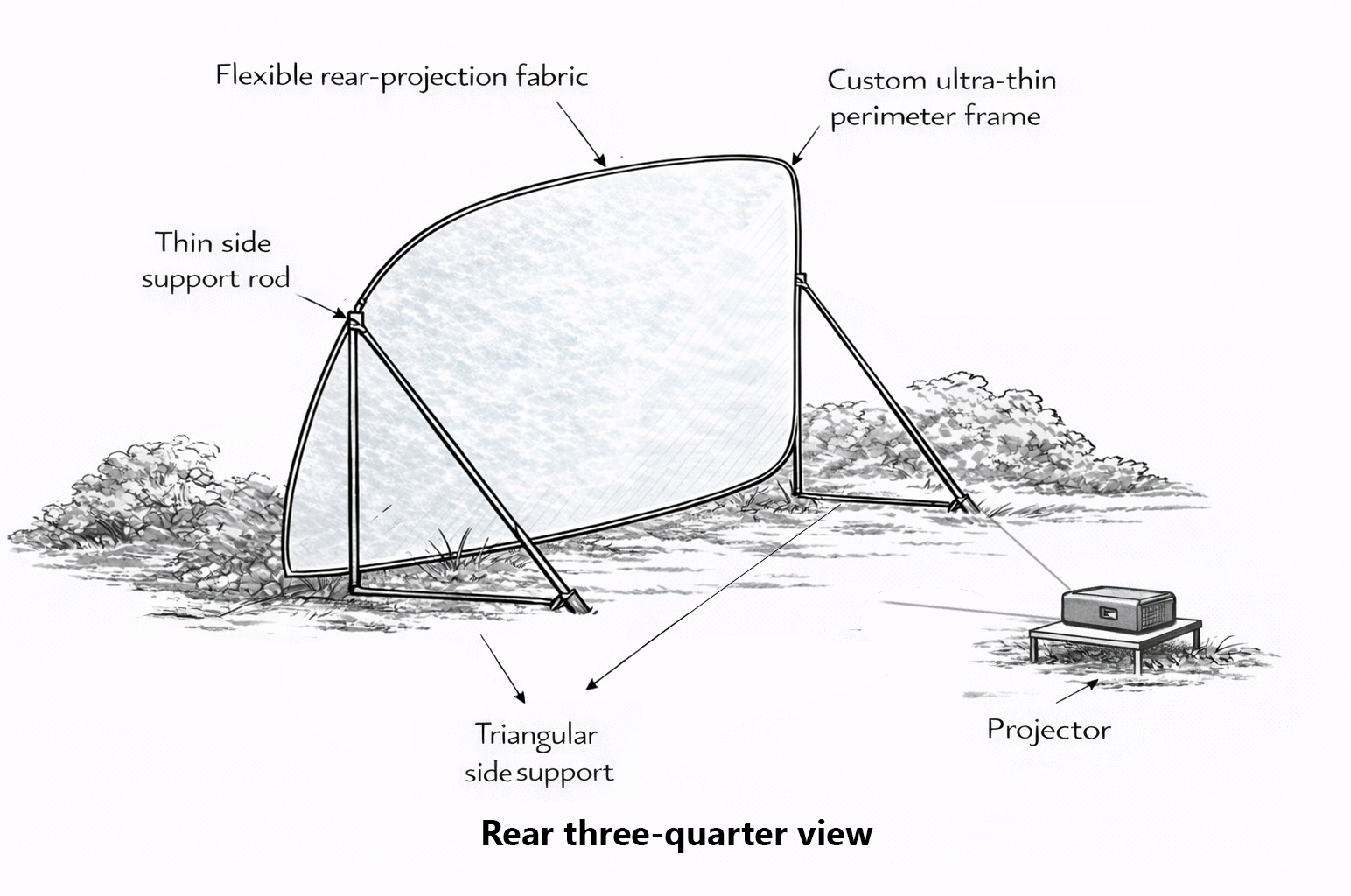

Unlike fully generative projects, these proposals required visual systems to conform to specific physical, architectural, and environmental constraints. As a result, AI generation operated as a modular and adaptive layer rather than a single unified output.

Initial concept imagery was produced using generative AI tools. These outputs were then refined through layered compositing, spatial re-framing, and targeted editing to align with site-specific conditions such as building façades, landscape contexts, and non-standard display surfaces.

Constraint-Driven Visual Design

In this workflow, visual quality is not defined by aesthetic appeal alone, but by feasibility within real spatial and environmental conditions.

This process allows AI-generated visuals to function not as speculative imagery, but as structurally viable and site-responsive proposal prototypes.