Rising River

A Personal Odyssey to Your Shadow | AI-driven Self-analysis VR experience | 2024

Rising River is an AI-mediated VR self-analysis experiment that borrows the language of therapy while quietly questioning it. Players board a small boat and sail into a cave to meet a giant fish that embodies their shadow self. As they speak in real time with the cave’s voice, a generative AI reshapes dialogue and environment into an interactive “mind cave” that mirrors, and subtly categorises, their inner landscape. The piece asks what it means to let a large language model script one’s most intimate reflections.

Exhibition Highlights:

Best XR+ Project – SXSW Sydney 2025, XR+ Showcase

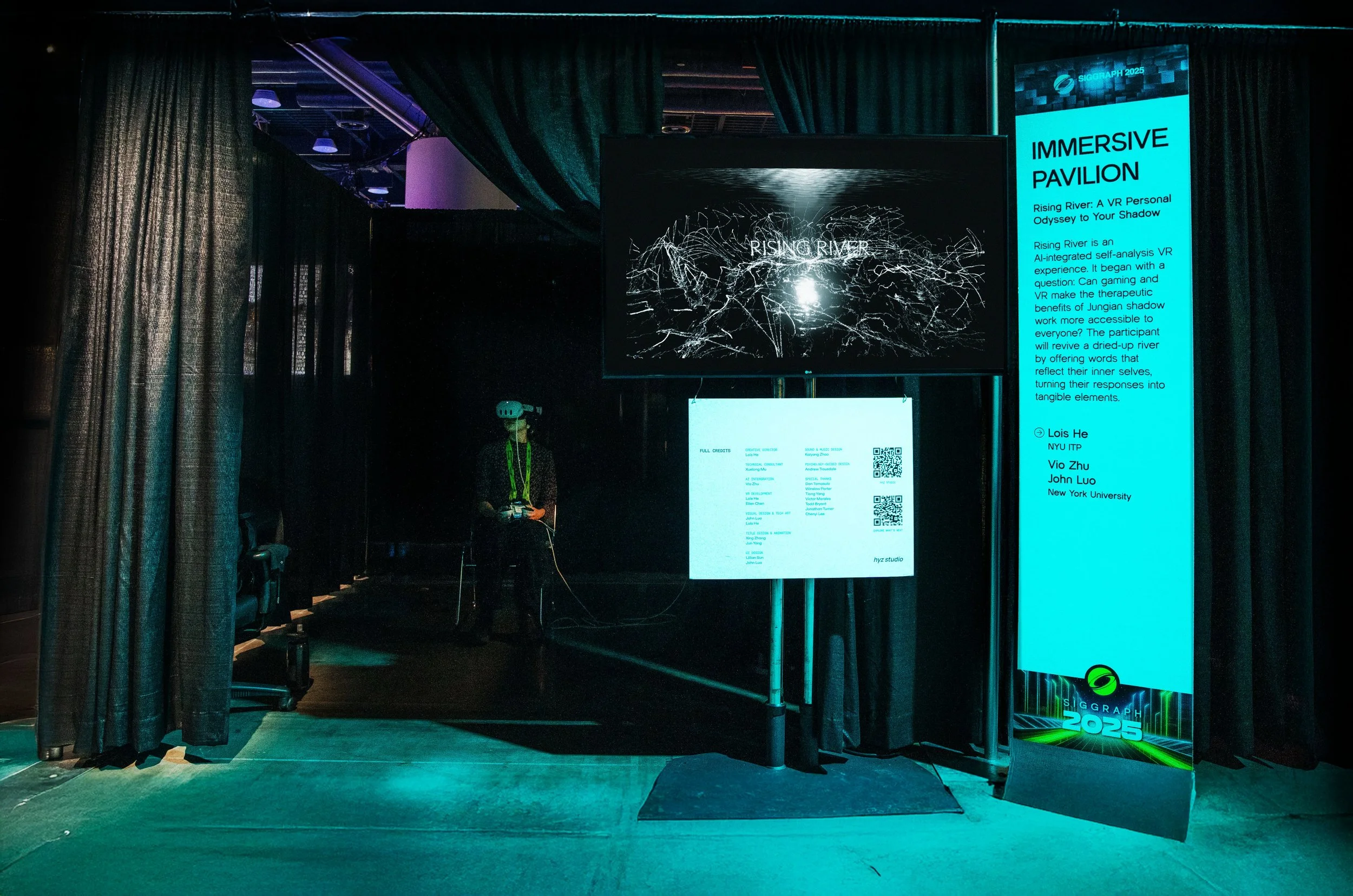

Official Selection – SIGGRAPH 2025 Immersive Pavilion, Vancouver, Canada

Official Selection – Arte Laguna Prize

Art X Gallery Session, GEN-AI SUMMIT Silicon Valley 2024 (sponsored by Microsoft), Palace of Fine Arts, San Francisco.

Artechouse, New York, US, January to April 2025 (screen-based version).

LAMAMA Gallery Space, New York, May 2024 showcase.

The Holy Art Gallery, in Athens and London, June 2024.

CICA Museum, "Contemporary Landscape 2025", Korea, January 8 to 26, 2025 (screen-based version).

SIGGRAPH 2025 - IMMERSIVE Pavilion

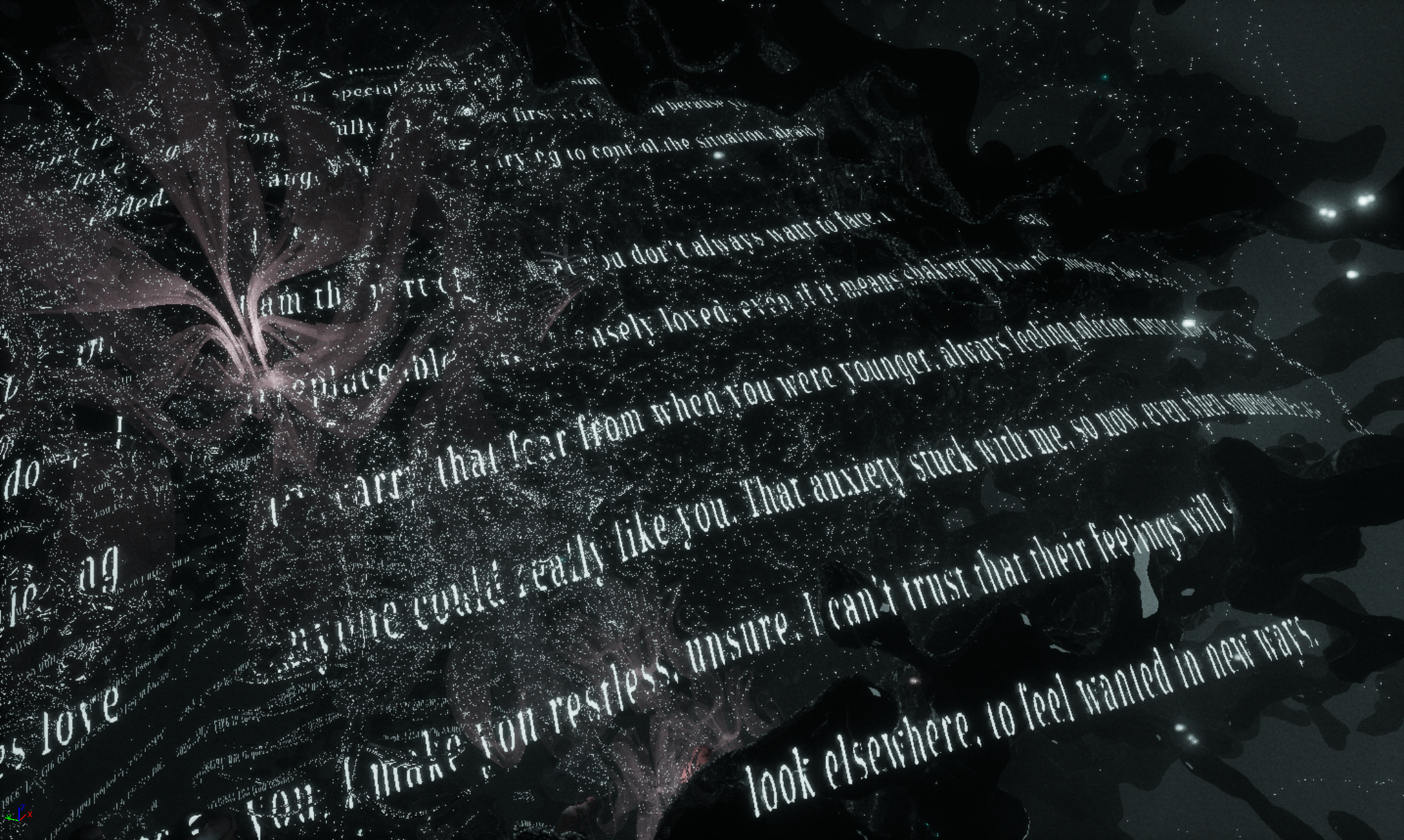

VR Experience Screenshot

SXSW Sydney 2025

Inspiration and Concept

The game integrates Jungian shadow theory and the VIA Character Strengths framework to explore how interactive media might open up shadow work to a wider audience, and what gets lost when psychological models are turned into systems. It began with a question that quickly split in two: can gaming and virtual reality make the insights of Jungian shadow work more widely accessible, and what happens when those insights are filtered through game mechanics and AI?

At its core, Jung’s theory of the shadow suggests that each person carries a neglected, suppressed part of the self. Traditional shadow work creates a structured encounter with this inner figure. To translate that encounter into an interactive experience, we designed a process of self-reflection that brings the shadow into view while acknowledging players’ fears, motivations, desires and memories.

We treated the tension between wider access and what is lost in systematisation as material. Our working assumption was that the traits players mark as lesser strengths in the VIA framework might point toward areas of insecurity or overlooked aspects of the self, while also showing how neatly a therapeutic framework can be captured by an interface. Generative AI is used to assemble a personalised narrative journey in a virtual cave, drawing on the player’s responses, but its voice is never neutral: it recombines patterns from its original training data and from our prompt design, echoing familiar scripts of guidance, care and self-improvement.

Through this embodied VR experience, participants are gently guided into self-exploration via a series of fixed and generative questions, boarding a small boat to revive a dried-up, dark river with words drawn from their inner life. Their responses become tangible elements in the world, but the piece also invites them to notice when the AI’s reflections feel uncannily accurate, when they feel generic or wrong, and what it means to outsource parts of introspection to a system that is always trying to sound therapeutic.

Rising River is not presented as treatment, but as an experiment in how AI, psychological frameworks and VR together reshape the way we talk to ourselves.

Revisiting the Experiment

Over time, the project also became a way to think through the limits of this approach. My original intention was quite sincere: to see whether an AI-supported form of self-dialogue could make shadow work feel more approachable and less intimidating. Early tests did produce moments of relief and recognition, but they also revealed how easily the system’s language slipped into generic encouragement, how quickly intimate struggles could be flattened into “traits” and “patterns”. Those mixed reactions are now part of the work: Rising River is as much about the unease of letting a model speak in a therapeutic voice as it is about the comfort of being listened to.

Technical Information

Platform: Unreal Engine 5

GPT API Integration: Generates dynamic questions and content using OpenAI's GPT, particularly for questions 5-7 and the shadow self revelation in the final scene outside the cave.

Prompt Engineering: Guides GPT in interpreting player questions and framing responses in a coherent, meaningful direction.

Real-Time Speech-to-Text: Captures player responses and converts them into text for prompt processing.

Text-to-Speech: Converts GPT-generated responses into natural-sounding AI voices.
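The components listed above form a loop: speech is transcribed, the transcript is folded into a prompt, GPT produces the next line, and that line is voiced. The sketch below is only an illustration of that flow in Python, not the project's Unreal Engine implementation. The names `Session`, `build_messages`, `run_turn` and the system prompt are hypothetical, and the speech and model services are passed in as plain functions so that no particular API is assumed. The only detail taken from the text is that questions 5-7 are generated while earlier ones are fixed.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]

# From the text above: questions 5-7 are generated; earlier ones are fixed.
GENERATIVE_QUESTIONS = range(5, 8)

# Hypothetical system prompt; the project's actual prompt is not shown here.
SYSTEM_PROMPT = "You are the voice of a cave. Ask one short reflective question."


@dataclass
class Session:
    """Transcribed player answers collected so far, in order."""
    answers: List[str] = field(default_factory=list)


def build_messages(session: Session) -> List[Message]:
    """Fold the answers so far into a chat-style message list."""
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for number, answer in enumerate(session.answers, start=1):
        messages.append({"role": "user", "content": f"Answer {number}: {answer}"})
    return messages


def run_turn(
    session: Session,
    audio: bytes,
    transcribe: Callable[[bytes], str],       # speech-to-text (assumed interface)
    generate: Callable[[List[Message]], str],  # language model (assumed interface)
    speak: Callable[[str], bytes],            # text-to-speech (assumed interface)
) -> Optional[bytes]:
    """Record one spoken answer; return voiced audio if the next question is generated."""
    session.answers.append(transcribe(audio))
    next_question = len(session.answers) + 1
    if next_question not in GENERATIVE_QUESTIONS:
        return None  # the next question is a fixed one, handled elsewhere
    return speak(generate(build_messages(session)))
```

Passing the three services in as callables keeps the turn logic testable with stand-ins and leaves the choice of speech and model providers open.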

Credits

Creative Director: Lois He

Technical Consultant: Xuelong Mu

AI Integration: Vio Zhu

VR Development: Lois He, Ellen Chen

Visual Design: John Luo

Tech Art & Environment Art: John Luo, Lois He

UI Design: Lillian Sun, John Luo

Title Design & Animation: Xing Zhang, Jun Yang

Sound & Music Design: Kaiyang Zhao

Psychology-Guided Design: Andrew Trousdale

Special Thanks: Winslow Porter, Dan Tomasulo, Victor Morales, Jonathan Turner, Tianqi Yang, Todd Bryant, Chenyi Lee